fork from github/youtube-local


32

.gitattributes

vendored

Normal file

@@ -0,0 +1,32 @@

# Basic .gitattributes for a python repo.

# Source files
# ============
*.pxd text
*.py text
*.py3 text
*.pyw text
*.pyx text

*.html text
*.xml text
*.xhtml text
*.htm text
*.css text
*.txt text

*.bat text eol=crlf

# Binary files
# ============
*.db binary
*.p binary
*.pkl binary
*.pyc binary
*.pyd binary
*.pyo binary

# Note: .db, .p, and .pkl files are associated
# with the python modules ``pickle``, ``dbm.*``,
# ``shelve``, ``marshal``, ``anydbm``, & ``bsddb``
# (among others).

16

.gitignore

vendored

Normal file

@@ -0,0 +1,16 @@

__pycache__/
*$py.class
debug/
data/
python/
release/
youtube-local/
banned_addresses.txt
settings.txt
get-pip.py
latest-dist.zip
*.7z
*.zip
*venv*
moy/
__pycache__/

62

HACKING.md

Normal file

@@ -0,0 +1,62 @@

# Coding guidelines

* Follow the [PEP 8 guidelines](https://www.python.org/dev/peps/pep-0008/) for all new Python code as best you can. This includes limiting line length to 79 characters (with an exception for long strings such as URLs that can't reasonably be broken across multiple lines) and using 4 spaces for indentation. Some old code doesn't follow PEP 8 yet.

* Do not use single-letter or cryptic names for variables (except iterator variables or the like). When in doubt, choose the more verbose option.

* For consistency, use ' instead of " for strings in all new code. Only use " when the string contains ' inside it. Exception: " is used for HTML attributes in Jinja templates.

* Don't leave trailing whitespace at the end of lines. Configure your editor to prevent this.

* Make commits highly descriptive, so that other people (and yourself in the future) know exactly why a change was made. The first line of the commit message is a short summary. Add a blank line and then a more extensive summary. If it is a bug fix, this should include a description of what caused the bug and how this commit fixes it. There's a lot of knowledge you gather while solving a problem. Dump as much of it as possible into the commit for others and yourself to learn from. Mention the issue number (e.g. Fixes #23) in your commit if applicable. [Here](https://www.freecodecamp.org/news/writing-good-commit-messages-a-practical-guide/) are some useful guidelines.

* The same guidelines apply to commenting code. If a piece of code is not self-explanatory, add a comment explaining what it does and why it's there.

# Testing and releases

* This project uses pytest. To install pytest and any future dependencies needed for development, run `pip3 install -r requirements-dev.txt`. To run tests, run `python3 -m pytest` rather than just `pytest` because the former will make sure the toplevel directory is in Python's import search path.

* To build releases for Windows, run `python3 generate_release.py [intended python version here, without v in front]`. The required software (such as 7z and git) is listed in the `generate_release.py` file. For instance, wine is required if building on Linux. The build script will automatically download the embedded Python release to include. Use the latest release of Python 3.7.x so that Vista will be supported. See https://github.com/user234683/youtube-local/issues/6#issuecomment-672608388

# Overview of the software architecture

## server.py

* This is the entry point, and sets up the HTTP server that listens for incoming requests. It delegates the request to the appropriate "site_handler". For instance, `localhost:8080/youtube.com/...` goes to the `youtube` site handler, whereas `localhost:8080/ytimg.com/...` (the url for video thumbnails) goes to the site handler for just fetching static resources such as images from Youtube.

* The reason for this architecture: the original design philosophy when I first conceived the project was that this would work for any site supported by youtube-dl, including Youtube, Vimeo, DailyMotion, etc. I've dropped this idea for now, though I might pick it up later. (youtube-dl is no longer used)

* This file uses the raw [WSGI request](https://www.python.org/dev/peps/pep-3333/) format. The WSGI format is a Python standard for how HTTP servers (I use the stock server provided by gevent) should call HTTP applications. That's why the file contains code like `env['REQUEST_METHOD']`.
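
The dispatch described in this section can be sketched as a plain WSGI callable. This is a minimal illustration, not the code in server.py: `site_dispatch`, `SITE_HANDLERS`, and the two handler functions are hypothetical names chosen for the example, and the real handlers do far more than echo the path.

```python
def youtube_handler(env, start_response):
    # Hypothetical stand-in for the real `youtube` site handler.
    start_response('200 OK', [('Content-Type', 'text/plain')])
    return [b'youtube handler: ' + env['PATH_INFO'].encode('utf-8')]


def static_handler(env, start_response):
    # Hypothetical stand-in for the handler that fetches static resources.
    start_response('200 OK', [('Content-Type', 'text/plain')])
    return [b'static handler: ' + env['PATH_INFO'].encode('utf-8')]


# Assumed mapping from the first path component to a site handler.
SITE_HANDLERS = {
    'youtube.com': youtube_handler,
    'ytimg.com': static_handler,
}


def site_dispatch(env, start_response):
    '''WSGI application that routes on the first component of the path.'''
    if env['REQUEST_METHOD'] not in ('GET', 'POST'):
        start_response('405 Method Not Allowed',
                       [('Content-Type', 'text/plain')])
        return [b'Method not allowed']

    # '/youtube.com/watch' -> site 'youtube.com', remainder 'watch'
    site, _, remainder = env['PATH_INFO'].lstrip('/').partition('/')
    handler = SITE_HANDLERS.get(site)
    if handler is None:
        start_response('404 Not Found', [('Content-Type', 'text/plain')])
        return [b'Unknown site']

    # Pass the handler a copy of the environment with the site prefix removed.
    handler_env = dict(env, PATH_INFO='/' + remainder)
    return handler(handler_env, start_response)
```

Because a WSGI application is just a callable taking `env` and `start_response`, a dispatcher like this can be exercised in tests without starting a server.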

## Flask and Gevent

* The `youtube` handler in server.py then delegates the request to the Flask yt_app object, which the rest of the project uses. [Flask](https://flask.palletsprojects.com/en/1.1.x/) is a web application framework that makes handling requests easier than accessing the raw WSGI requests. Flask (Werkzeug specifically) figures out which function to call for a particular url. Each request handling function is registered into Flask's routing table by using a decorator above it. The request handling functions are always at the bottom of the file for a particular Youtube page (channel, watch, playlist, etc.), and they're where you want to look to see how the response gets constructed for a particular url. Miscellaneous request handlers that don't belong anywhere else are located in `__init__.py`, which is where the `yt_app` object is instantiated.

* The actual html for youtube-local is generated using Jinja templates. Jinja lets you embed a Python-like language inside html files so you can use constructs such as for loops to construct the html for a list of 30 videos given a dictionary with information for those videos. Jinja is installed as a dependency of Flask. It has some annoying differences from Python in a lot of details, so check the [docs here](https://jinja.palletsprojects.com/en/2.11.x/) when you use it. The request handling functions will pass the information that has been scraped from Youtube into these templates for the final result.

* The project uses the gevent library for parallelism (such as for launching requests in parallel), as opposed to using the `async` keyword.

## util.py

* util.py is a grab-bag of miscellaneous things; admittedly I need to get around to refactoring it. The biggest thing it has is the `fetch_url` function, which is what I use for sending out requests to Youtube. The Tor routing is managed here. `fetch_url` will raise a `FetchError` exception if the request fails. The parameter `debug_name` in `fetch_url` is the filename that the response from Youtube will be saved to if the hidden debugging option is enabled in settings.txt. So if there's a bug when Youtube changes something, you can check the response from Youtube in that file.
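
The error-handling and debug-dump behaviour described for `fetch_url` can be illustrated with a small stand-in. This is a sketch under stated assumptions, not the project's function: `fetch_url_sketch`, its `transport` argument, and the `debug_dir` parameter are hypothetical (the real function reads its debugging option from settings.txt and performs the network request itself), and the `FetchError` class here is a local placeholder for the project's exception of the same name.

```python
import os


class FetchError(Exception):
    '''Local placeholder for the project's FetchError exception.'''


def fetch_url_sketch(url, transport, debug_name=None, debug_dir=None):
    '''Fetch `url` via `transport`, a callable returning the body as bytes.

    Network-level failures are re-raised as FetchError. If both
    `debug_name` and `debug_dir` are given, the raw response is saved to
    that file so it can be inspected later.
    '''
    try:
        body = transport(url)
    except OSError as error:
        # ConnectionError and socket timeouts are subclasses of OSError.
        raise FetchError('Failed to fetch ' + url) from error

    if debug_name is not None and debug_dir is not None:
        with open(os.path.join(debug_dir, debug_name), 'wb') as debug_file:
            debug_file.write(body)
    return body
```

Callers only need to catch one exception type, and the saved file gives a copy of exactly what the remote server returned when something breaks.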

## Data extraction - protobuf, polymer, and yt_data_extract

* proto.py is used for generating what are called ctokens needed when making requests to Youtube. These ctokens use Google's [protobuf](https://developers.google.com/protocol-buffers) format. Figuring out how to generate these in new instances requires some reverse engineering. I have a messy python file I use to make this convenient, which you can find under `./youtube/proto_debug.py`.

* The responses from Youtube are in a JSON format called polymer (polymer is the name of the 2017-present Youtube layout). The JSON consists of a bunch of nested dictionaries which basically specify the layout of the page via objects called renderers. A renderer represents an object on a page in a similar way to html tags; renderers often contain other renderers inside them. The Javascript on Youtube's page translates this JSON to HTML. Example: `compactVideoRenderer` represents a video item you can click on, such as in the related videos (so these are called "items" in the codebase). This JSON is very messy. You'll need a JSON prettifier or something that gives you a tree view in order to study it.

* `yt_data_extract` is a module that parses this raw JSON page layout and extracts the useful information from it into a standardized dictionary. So for instance, it can take the raw JSON response from the watch page and return a dictionary containing keys such as `title`, `description`, `related_videos` (a list), `likes`, etc. This module contains a lot of abstractions designed to make parsing the polymer format easier and more resilient towards changes from Youtube. (A lot of Youtube extractors just traverse the JSON tree like `response[1]['response']['continuation']['gridContinuationRenderer']['items']...` but this tends to break frequently when Youtube changes things.) If it fails to extract a piece of data, such as the like count, it will place `None` in that entry. Exceptions are not used in this module. So it uses functions which return None if there's a failure, such as `deep_get(response, 1, 'response', 'continuation', 'gridContinuationRenderer', 'items')` which returns None if any of those keys aren't present. The general purpose abstractions are located in `common.py`, while the functions for parsing specific responses (watch page, playlist, channel, etc.) are located in `watch_extraction.py` and `everything_else.py`.

* Most of these abstractions are self-explanatory, except for `extract_items_from_renderer`, a function that performs a recursive search for the specified renderers. You give it a renderer which contains nested renderers, and a set of the renderer types you want to extract (by default, these are the video/playlist/channel preview items). It will search through the nested renderers and gather the specified items, in addition to the continuation token (ctoken) for the last list of items it finds if there is one. Using this function achieves resiliency against Youtube rearranging the items into a different hierarchy.

* The `extract_items` function is similar but works on the response object, automatically finding the appropriate renderer to call `extract_items_from_renderer` on.
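
The two ideas in this section, a lookup that returns None instead of raising and a recursive search for renderers by type, can be sketched as follows. These are simplified illustrations and not the implementations in `common.py`: the `default` keyword on `deep_get` is an assumption, `find_renderers` is a hypothetical name for a reduced version of the recursive search (it does not collect continuation tokens), and the sample response is made up.

```python
def deep_get(data, *keys, default=None):
    '''Follow `keys` through nested dicts/lists, returning `default`
    as soon as a key or index is missing.'''
    current = data
    for key in keys:
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError):
            # KeyError: missing dict key; IndexError: list too short;
            # TypeError: current is None or the wrong kind of container.
            return default
    return current


def find_renderers(node, wanted_types):
    '''Recursively collect (type, renderer) pairs whose type is in
    `wanted_types`, wherever they sit in the hierarchy.'''
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            if key in wanted_types and isinstance(value, dict):
                found.append((key, value))
            else:
                found.extend(find_renderers(value, wanted_types))
    elif isinstance(node, list):
        for child in node:
            found.extend(find_renderers(child, wanted_types))
    return found


# Made-up response fragment in the style of the polymer JSON.
sample_response = {
    'contents': {
        'sectionListRenderer': {
            'contents': [
                {'itemSectionRenderer': {
                    'contents': [
                        {'compactVideoRenderer': {'videoId': 'abc'}},
                        {'someOtherRenderer': {'text': 'ignored'}},
                    ],
                }},
            ],
        },
    },
}

video_items = find_renderers(sample_response, {'compactVideoRenderer'})
missing = deep_get(sample_response, 'contents', 'gridRenderer', 'items')
```

Because the search does not hard-code the path to the items, it keeps working if the wrapping renderers are renamed or nested differently, which is the resilience the text above describes.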

## Other

* subscriptions.py uses SQLite to store data.

* Hidden settings only relevant to developers (such as for debugging) are not displayed on the settings page. They can be found in the settings.txt file.

* Since I can't anticipate the things that will trip up beginners to the codebase, if you spend a while figuring something out, go ahead and make a pull request adding a brief description of your findings to this document to help other beginners.

## Development tips

* When developing functionality to interact with Youtube in new ways, you'll want to use the network tab in your browser's devtools to inspect which requests get made under normal usage of Youtube. You'll also want a tool you can use to construct custom requests and specify headers to reverse engineer the request format. I use the [HeaderTool](https://github.com/loreii/HeaderTool) extension in Firefox, but there's probably a more streamlined program out there.

* You'll want to have a utility or IDE that can perform full text search on a repository, since this is crucial for navigating unfamiliar codebases to figure out where certain strings appear or where things get defined.

* If you're confused about the purpose of a particular line/section of code, you can use the "git blame" feature on github (click the line number and then the three dots) to view the commit where the line of code was created and check the commit message. This will give you an idea of how it was put together.

619

LICENSE

Normal file

@@ -0,0 +1,619 @@

GNU AFFERO GENERAL PUBLIC LICENSE
Version 3, 19 November 2007

Copyright (C) 2007 Free Software Foundation, Inc. <https://fsf.org/>
Everyone is permitted to copy and distribute verbatim copies
of this license document, but changing it is not allowed.

Preamble

The GNU Affero General Public License is a free, copyleft license for
software and other kinds of works, specifically designed to ensure
cooperation with the community in the case of network server software.

The licenses for most software and other practical works are designed
to take away your freedom to share and change the works. By contrast,
our General Public Licenses are intended to guarantee your freedom to
share and change all versions of a program--to make sure it remains free
software for all its users.

When we speak of free software, we are referring to freedom, not
price. Our General Public Licenses are designed to make sure that you
have the freedom to distribute copies of free software (and charge for
them if you wish), that you receive source code or can get it if you
want it, that you can change the software or use pieces of it in new
free programs, and that you know you can do these things.

Developers that use our General Public Licenses protect your rights
with two steps: (1) assert copyright on the software, and (2) offer
you this License which gives you legal permission to copy, distribute
and/or modify the software.

A secondary benefit of defending all users' freedom is that
improvements made in alternate versions of the program, if they
receive widespread use, become available for other developers to
incorporate. Many developers of free software are heartened and
encouraged by the resulting cooperation. However, in the case of
software used on network servers, this result may fail to come about.
The GNU General Public License permits making a modified version and
letting the public access it on a server without ever releasing its
source code to the public.

The GNU Affero General Public License is designed specifically to
ensure that, in such cases, the modified source code becomes available
to the community. It requires the operator of a network server to
provide the source code of the modified version running there to the
users of that server. Therefore, public use of a modified version, on
a publicly accessible server, gives the public access to the source
code of the modified version.

An older license, called the Affero General Public License and
published by Affero, was designed to accomplish similar goals. This is
a different license, not a version of the Affero GPL, but Affero has
released a new version of the Affero GPL which permits relicensing under
this license.

The precise terms and conditions for copying, distribution and
modification follow.

TERMS AND CONDITIONS

0. Definitions.

"This License" refers to version 3 of the GNU Affero General Public License.

"Copyright" also means copyright-like laws that apply to other kinds of
works, such as semiconductor masks.

"The Program" refers to any copyrightable work licensed under this
License. Each licensee is addressed as "you". "Licensees" and
"recipients" may be individuals or organizations.

To "modify" a work means to copy from or adapt all or part of the work
in a fashion requiring copyright permission, other than the making of an
exact copy. The resulting work is called a "modified version" of the
earlier work or a work "based on" the earlier work.

A "covered work" means either the unmodified Program or a work based
on the Program.

To "propagate" a work means to do anything with it that, without
permission, would make you directly or secondarily liable for
infringement under applicable copyright law, except executing it on a
computer or modifying a private copy. Propagation includes copying,
distribution (with or without modification), making available to the
public, and in some countries other activities as well.

To "convey" a work means any kind of propagation that enables other
parties to make or receive copies. Mere interaction with a user through
a computer network, with no transfer of a copy, is not conveying.

An interactive user interface displays "Appropriate Legal Notices"
to the extent that it includes a convenient and prominently visible
feature that (1) displays an appropriate copyright notice, and (2)
tells the user that there is no warranty for the work (except to the
extent that warranties are provided), that licensees may convey the
work under this License, and how to view a copy of this License. If
the interface presents a list of user commands or options, such as a
menu, a prominent item in the list meets this criterion.

1. Source Code.

The "source code" for a work means the preferred form of the work
for making modifications to it. "Object code" means any non-source
form of a work.

A "Standard Interface" means an interface that either is an official
standard defined by a recognized standards body, or, in the case of
interfaces specified for a particular programming language, one that
is widely used among developers working in that language.

The "System Libraries" of an executable work include anything, other
than the work as a whole, that (a) is included in the normal form of
packaging a Major Component, but which is not part of that Major
Component, and (b) serves only to enable use of the work with that
Major Component, or to implement a Standard Interface for which an
implementation is available to the public in source code form. A
"Major Component", in this context, means a major essential component
(kernel, window system, and so on) of the specific operating system
(if any) on which the executable work runs, or a compiler used to
produce the work, or an object code interpreter used to run it.

The "Corresponding Source" for a work in object code form means all
the source code needed to generate, install, and (for an executable
work) run the object code and to modify the work, including scripts to
control those activities. However, it does not include the work's
System Libraries, or general-purpose tools or generally available free
programs which are used unmodified in performing those activities but
which are not part of the work. For example, Corresponding Source
includes interface definition files associated with source files for
the work, and the source code for shared libraries and dynamically
linked subprograms that the work is specifically designed to require,
such as by intimate data communication or control flow between those
subprograms and other parts of the work.

The Corresponding Source need not include anything that users
can regenerate automatically from other parts of the Corresponding
Source.

The Corresponding Source for a work in source code form is that
same work.

2. Basic Permissions.

All rights granted under this License are granted for the term of
copyright on the Program, and are irrevocable provided the stated
conditions are met. This License explicitly affirms your unlimited
permission to run the unmodified Program. The output from running a
covered work is covered by this License only if the output, given its
content, constitutes a covered work. This License acknowledges your
rights of fair use or other equivalent, as provided by copyright law.

You may make, run and propagate covered works that you do not
convey, without conditions so long as your license otherwise remains
in force. You may convey covered works to others for the sole purpose
of having them make modifications exclusively for you, or provide you
with facilities for running those works, provided that you comply with
the terms of this License in conveying all material for which you do
not control copyright. Those thus making or running the covered works
for you must do so exclusively on your behalf, under your direction
and control, on terms that prohibit them from making any copies of
your copyrighted material outside their relationship with you.

Conveying under any other circumstances is permitted solely under
the conditions stated below. Sublicensing is not allowed; section 10
makes it unnecessary.

3. Protecting Users' Legal Rights From Anti-Circumvention Law.

No covered work shall be deemed part of an effective technological
measure under any applicable law fulfilling obligations under article
11 of the WIPO copyright treaty adopted on 20 December 1996, or
similar laws prohibiting or restricting circumvention of such
measures.

When you convey a covered work, you waive any legal power to forbid
circumvention of technological measures to the extent such circumvention
is effected by exercising rights under this License with respect to
the covered work, and you disclaim any intention to limit operation or
modification of the work as a means of enforcing, against the work's
users, your or third parties' legal rights to forbid circumvention of
technological measures.

4. Conveying Verbatim Copies.

You may convey verbatim copies of the Program's source code as you
receive it, in any medium, provided that you conspicuously and
appropriately publish on each copy an appropriate copyright notice;
keep intact all notices stating that this License and any
non-permissive terms added in accord with section 7 apply to the code;
keep intact all notices of the absence of any warranty; and give all
recipients a copy of this License along with the Program.

You may charge any price or no price for each copy that you convey,
and you may offer support or warranty protection for a fee.

5. Conveying Modified Source Versions.

You may convey a work based on the Program, or the modifications to
produce it from the Program, in the form of source code under the
terms of section 4, provided that you also meet all of these conditions:

a) The work must carry prominent notices stating that you modified
it, and giving a relevant date.

b) The work must carry prominent notices stating that it is
released under this License and any conditions added under section
7. This requirement modifies the requirement in section 4 to
"keep intact all notices".

c) You must license the entire work, as a whole, under this
License to anyone who comes into possession of a copy. This
License will therefore apply, along with any applicable section 7
additional terms, to the whole of the work, and all its parts,
regardless of how they are packaged. This License gives no
permission to license the work in any other way, but it does not
invalidate such permission if you have separately received it.

d) If the work has interactive user interfaces, each must display
Appropriate Legal Notices; however, if the Program has interactive
interfaces that do not display Appropriate Legal Notices, your
work need not make them do so.

A compilation of a covered work with other separate and independent
works, which are not by their nature extensions of the covered work,
and which are not combined with it such as to form a larger program,
in or on a volume of a storage or distribution medium, is called an
"aggregate" if the compilation and its resulting copyright are not
used to limit the access or legal rights of the compilation's users
beyond what the individual works permit. Inclusion of a covered work
in an aggregate does not cause this License to apply to the other
parts of the aggregate.

6. Conveying Non-Source Forms.

You may convey a covered work in object code form under the terms
of sections 4 and 5, provided that you also convey the
machine-readable Corresponding Source under the terms of this License,
in one of these ways:

a) Convey the object code in, or embodied in, a physical product
(including a physical distribution medium), accompanied by the
Corresponding Source fixed on a durable physical medium
customarily used for software interchange.

b) Convey the object code in, or embodied in, a physical product
(including a physical distribution medium), accompanied by a
written offer, valid for at least three years and valid for as
long as you offer spare parts or customer support for that product
model, to give anyone who possesses the object code either (1) a
copy of the Corresponding Source for all the software in the
product that is covered by this License, on a durable physical
medium customarily used for software interchange, for a price no
more than your reasonable cost of physically performing this
conveying of source, or (2) access to copy the
Corresponding Source from a network server at no charge.

c) Convey individual copies of the object code with a copy of the
written offer to provide the Corresponding Source. This
alternative is allowed only occasionally and noncommercially, and
only if you received the object code with such an offer, in accord
with subsection 6b.

d) Convey the object code by offering access from a designated
place (gratis or for a charge), and offer equivalent access to the
Corresponding Source in the same way through the same place at no
further charge. You need not require recipients to copy the
Corresponding Source along with the object code. If the place to
copy the object code is a network server, the Corresponding Source
may be on a different server (operated by you or a third party)
that supports equivalent copying facilities, provided you maintain
clear directions next to the object code saying where to find the
Corresponding Source. Regardless of what server hosts the
Corresponding Source, you remain obligated to ensure that it is
available for as long as needed to satisfy these requirements.

e) Convey the object code using peer-to-peer transmission, provided
you inform other peers where the object code and Corresponding
Source of the work are being offered to the general public at no
charge under subsection 6d.

A separable portion of the object code, whose source code is excluded
from the Corresponding Source as a System Library, need not be
included in conveying the object code work.

A "User Product" is either (1) a "consumer product", which means any
tangible personal property which is normally used for personal, family,
or household purposes, or (2) anything designed or sold for incorporation
into a dwelling. In determining whether a product is a consumer product,
doubtful cases shall be resolved in favor of coverage. For a particular
product received by a particular user, "normally used" refers to a
typical or common use of that class of product, regardless of the status
of the particular user or of the way in which the particular user
actually uses, or expects or is expected to use, the product. A product
is a consumer product regardless of whether the product has substantial
commercial, industrial or non-consumer uses, unless such uses represent
the only significant mode of use of the product.

"Installation Information" for a User Product means any methods,
procedures, authorization keys, or other information required to install
and execute modified versions of a covered work in that User Product from
a modified version of its Corresponding Source. The information must
suffice to ensure that the continued functioning of the modified object
code is in no case prevented or interfered with solely because
modification has been made.

If you convey an object code work under this section in, or with, or
specifically for use in, a User Product, and the conveying occurs as
part of a transaction in which the right of possession and use of the
User Product is transferred to the recipient in perpetuity or for a
fixed term (regardless of how the transaction is characterized), the
Corresponding Source conveyed under this section must be accompanied
by the Installation Information. But this requirement does not apply
if neither you nor any third party retains the ability to install
modified object code on the User Product (for example, the work has
been installed in ROM).

The requirement to provide Installation Information does not include a
requirement to continue to provide support service, warranty, or updates
for a work that has been modified or installed by the recipient, or for
the User Product in which it has been modified or installed. Access to a
network may be denied when the modification itself materially and
adversely affects the operation of the network or violates the rules and

|

||||||

|

protocols for communication across the network.

|

||||||

|

|

||||||

|

Corresponding Source conveyed, and Installation Information provided,

|

||||||

|

in accord with this section must be in a format that is publicly

|

||||||

|

documented (and with an implementation available to the public in

|

||||||

|

source code form), and must require no special password or key for

|

||||||

|

unpacking, reading or copying.

|

||||||

|

|

||||||

|

7. Additional Terms.

|

||||||

|

|

||||||

|

"Additional permissions" are terms that supplement the terms of this

|

||||||

|

License by making exceptions from one or more of its conditions.

|

||||||

|

Additional permissions that are applicable to the entire Program shall

|

||||||

|

be treated as though they were included in this License, to the extent

|

||||||

|

that they are valid under applicable law. If additional permissions

|

||||||

|

apply only to part of the Program, that part may be used separately

|

||||||

|

under those permissions, but the entire Program remains governed by

|

||||||

|

this License without regard to the additional permissions.

|

||||||

|

|

||||||

|

When you convey a copy of a covered work, you may at your option

|

||||||

|

remove any additional permissions from that copy, or from any part of

|

||||||

|

it. (Additional permissions may be written to require their own

|

||||||

|

removal in certain cases when you modify the work.) You may place

|

||||||

|

additional permissions on material, added by you to a covered work,

|

||||||

|

for which you have or can give appropriate copyright permission.

|

||||||

|

|

||||||

|

Notwithstanding any other provision of this License, for material you

|

||||||

|

add to a covered work, you may (if authorized by the copyright holders of

|

||||||

|

that material) supplement the terms of this License with terms:

|

||||||

|

|

||||||

|

a) Disclaiming warranty or limiting liability differently from the

|

||||||

|

terms of sections 15 and 16 of this License; or

|

||||||

|

|

||||||

|

b) Requiring preservation of specified reasonable legal notices or

|

||||||

|

author attributions in that material or in the Appropriate Legal

|

||||||

|

Notices displayed by works containing it; or

|

||||||

|

|

||||||

|

c) Prohibiting misrepresentation of the origin of that material, or

|

||||||

|

requiring that modified versions of such material be marked in

|

||||||

|

reasonable ways as different from the original version; or

|

||||||

|

|

||||||

|

d) Limiting the use for publicity purposes of names of licensors or

|

||||||

|

authors of the material; or

|

||||||

|

|

||||||

|

e) Declining to grant rights under trademark law for use of some

|

||||||

|

trade names, trademarks, or service marks; or

|

||||||

|

|

||||||

|

f) Requiring indemnification of licensors and authors of that

|

||||||

|

material by anyone who conveys the material (or modified versions of

|

||||||

|

it) with contractual assumptions of liability to the recipient, for

|

||||||

|

any liability that these contractual assumptions directly impose on

|

||||||

|

those licensors and authors.

|

||||||

|

|

||||||

|

All other non-permissive additional terms are considered "further

|

||||||

|

restrictions" within the meaning of section 10. If the Program as you

|

||||||

|

received it, or any part of it, contains a notice stating that it is

|

||||||

|

governed by this License along with a term that is a further

|

||||||

|

restriction, you may remove that term. If a license document contains

|

||||||

|

a further restriction but permits relicensing or conveying under this

|

||||||

|

License, you may add to a covered work material governed by the terms

|

||||||

|

of that license document, provided that the further restriction does

|

||||||

|

not survive such relicensing or conveying.

|

||||||

|

|

||||||

|

If you add terms to a covered work in accord with this section, you

|

||||||

|

must place, in the relevant source files, a statement of the

|

||||||

|

additional terms that apply to those files, or a notice indicating

|

||||||

|

where to find the applicable terms.

|

||||||

|

|

||||||

|

Additional terms, permissive or non-permissive, may be stated in the

|

||||||

|

form of a separately written license, or stated as exceptions;

|

||||||

|

the above requirements apply either way.

|

||||||

|

|

||||||

|

8. Termination.

|

||||||

|

|

||||||

|

You may not propagate or modify a covered work except as expressly

|

||||||

|

provided under this License. Any attempt otherwise to propagate or

|

||||||

|

modify it is void, and will automatically terminate your rights under

|

||||||

|

this License (including any patent licenses granted under the third

|

||||||

|

paragraph of section 11).

|

||||||

|

|

||||||

|

However, if you cease all violation of this License, then your

|

||||||

|

license from a particular copyright holder is reinstated (a)

|

||||||

|

provisionally, unless and until the copyright holder explicitly and

|

||||||

|

finally terminates your license, and (b) permanently, if the copyright

|

||||||

|

holder fails to notify you of the violation by some reasonable means

|

||||||

|

prior to 60 days after the cessation.

|

||||||

|

|

||||||

|

Moreover, your license from a particular copyright holder is

|

||||||

|

reinstated permanently if the copyright holder notifies you of the

|

||||||

|

violation by some reasonable means, this is the first time you have

|

||||||

|

received notice of violation of this License (for any work) from that

|

||||||

|

copyright holder, and you cure the violation prior to 30 days after

|

||||||

|

your receipt of the notice.

|

||||||

|

|

||||||

|

Termination of your rights under this section does not terminate the

|

||||||

|

licenses of parties who have received copies or rights from you under

|

||||||

|

this License. If your rights have been terminated and not permanently

|

||||||

|

reinstated, you do not qualify to receive new licenses for the same

|

||||||

|

material under section 10.

|

||||||

|

|

||||||

|

9. Acceptance Not Required for Having Copies.

|

||||||

|

|

||||||

|

You are not required to accept this License in order to receive or

|

||||||

|

run a copy of the Program. Ancillary propagation of a covered work

|

||||||

|

occurring solely as a consequence of using peer-to-peer transmission

|

||||||

|

to receive a copy likewise does not require acceptance. However,

|

||||||

|

nothing other than this License grants you permission to propagate or

|

||||||

|

modify any covered work. These actions infringe copyright if you do

|

||||||

|

not accept this License. Therefore, by modifying or propagating a

|

||||||

|

covered work, you indicate your acceptance of this License to do so.

|

||||||

|

|

||||||

|

10. Automatic Licensing of Downstream Recipients.

|

||||||

|

|

||||||

|

Each time you convey a covered work, the recipient automatically

|

||||||

|

receives a license from the original licensors, to run, modify and

|

||||||

|

propagate that work, subject to this License. You are not responsible

|

||||||

|

for enforcing compliance by third parties with this License.

|

||||||

|

|

||||||

|

An "entity transaction" is a transaction transferring control of an

|

||||||

|

organization, or substantially all assets of one, or subdividing an

|

||||||

|

organization, or merging organizations. If propagation of a covered

|

||||||

|

work results from an entity transaction, each party to that

|

||||||

|

transaction who receives a copy of the work also receives whatever

|

||||||

|

licenses to the work the party's predecessor in interest had or could

|

||||||

|

give under the previous paragraph, plus a right to possession of the

|

||||||

|

Corresponding Source of the work from the predecessor in interest, if

|

||||||

|

the predecessor has it or can get it with reasonable efforts.

|

||||||

|

|

||||||

|

You may not impose any further restrictions on the exercise of the

|

||||||

|

rights granted or affirmed under this License. For example, you may

|

||||||

|

not impose a license fee, royalty, or other charge for exercise of

|

||||||

|

rights granted under this License, and you may not initiate litigation

|

||||||

|

(including a cross-claim or counterclaim in a lawsuit) alleging that

|

||||||

|

any patent claim is infringed by making, using, selling, offering for

|

||||||

|

sale, or importing the Program or any portion of it.

|

||||||

|

|

||||||

|

11. Patents.

|

||||||

|

|

||||||

|

A "contributor" is a copyright holder who authorizes use under this

|

||||||

|

License of the Program or a work on which the Program is based. The

|

||||||

|

work thus licensed is called the contributor's "contributor version".

|

||||||

|

|

||||||

|

A contributor's "essential patent claims" are all patent claims

|

||||||

|

owned or controlled by the contributor, whether already acquired or

|

||||||

|

hereafter acquired, that would be infringed by some manner, permitted

|

||||||

|

by this License, of making, using, or selling its contributor version,

|

||||||

|

but do not include claims that would be infringed only as a

|

||||||

|

consequence of further modification of the contributor version. For

|

||||||

|

purposes of this definition, "control" includes the right to grant

|

||||||

|

patent sublicenses in a manner consistent with the requirements of

|

||||||

|

this License.

|

||||||

|

|

||||||

|

Each contributor grants you a non-exclusive, worldwide, royalty-free

|

||||||

|

patent license under the contributor's essential patent claims, to

|

||||||

|

make, use, sell, offer for sale, import and otherwise run, modify and

|

||||||

|

propagate the contents of its contributor version.

|

||||||

|

|

||||||

|

In the following three paragraphs, a "patent license" is any express

|

||||||

|

agreement or commitment, however denominated, not to enforce a patent

|

||||||

|

(such as an express permission to practice a patent or covenant not to

|

||||||

|

sue for patent infringement). To "grant" such a patent license to a

|

||||||

|

party means to make such an agreement or commitment not to enforce a

|

||||||

|

patent against the party.

|

||||||

|

|

||||||

|

If you convey a covered work, knowingly relying on a patent license,

|

||||||

|

and the Corresponding Source of the work is not available for anyone

|

||||||

|

to copy, free of charge and under the terms of this License, through a

|

||||||

|

publicly available network server or other readily accessible means,

|

||||||

|

then you must either (1) cause the Corresponding Source to be so

|

||||||

|

available, or (2) arrange to deprive yourself of the benefit of the

|

||||||

|

patent license for this particular work, or (3) arrange, in a manner

|

||||||

|

consistent with the requirements of this License, to extend the patent

|

||||||

|

license to downstream recipients. "Knowingly relying" means you have

|

||||||

|

actual knowledge that, but for the patent license, your conveying the

|

||||||

|

covered work in a country, or your recipient's use of the covered work

|

||||||

|

in a country, would infringe one or more identifiable patents in that

|

||||||

|

country that you have reason to believe are valid.

|

||||||

|

|

||||||

|

If, pursuant to or in connection with a single transaction or

|

||||||

|

arrangement, you convey, or propagate by procuring conveyance of, a

|

||||||

|

covered work, and grant a patent license to some of the parties

|

||||||

|

receiving the covered work authorizing them to use, propagate, modify

|

||||||

|

or convey a specific copy of the covered work, then the patent license

|

||||||

|

you grant is automatically extended to all recipients of the covered

|

||||||

|

work and works based on it.

|

||||||

|

|

||||||

|

A patent license is "discriminatory" if it does not include within

|

||||||

|

the scope of its coverage, prohibits the exercise of, or is

|

||||||

|

conditioned on the non-exercise of one or more of the rights that are

|

||||||

|

specifically granted under this License. You may not convey a covered

|

||||||

|

work if you are a party to an arrangement with a third party that is

|

||||||

|

in the business of distributing software, under which you make payment

|

||||||

|

to the third party based on the extent of your activity of conveying

|

||||||

|

the work, and under which the third party grants, to any of the

|

||||||

|

parties who would receive the covered work from you, a discriminatory

|

||||||

|

patent license (a) in connection with copies of the covered work

|

||||||

|

conveyed by you (or copies made from those copies), or (b) primarily

|

||||||

|

for and in connection with specific products or compilations that

|

||||||

|

contain the covered work, unless you entered into that arrangement,

|

||||||

|

or that patent license was granted, prior to 28 March 2007.

|

||||||

|

|

||||||

|

Nothing in this License shall be construed as excluding or limiting

|

||||||

|

any implied license or other defenses to infringement that may

|

||||||

|

otherwise be available to you under applicable patent law.

|

||||||

|

|

||||||

|

12. No Surrender of Others' Freedom.

|

||||||

|

|

||||||

|

If conditions are imposed on you (whether by court order, agreement or

|

||||||

|

otherwise) that contradict the conditions of this License, they do not

|

||||||

|

excuse you from the conditions of this License. If you cannot convey a

|

||||||

|

covered work so as to satisfy simultaneously your obligations under this

|

||||||

|

License and any other pertinent obligations, then as a consequence you may

|

||||||

|

not convey it at all. For example, if you agree to terms that obligate you

|

||||||

|

to collect a royalty for further conveying from those to whom you convey

|

||||||

|

the Program, the only way you could satisfy both those terms and this

|

||||||

|

License would be to refrain entirely from conveying the Program.

|

||||||

|

|

||||||

|

13. Remote Network Interaction; Use with the GNU General Public License.

|

||||||

|

|

||||||

|

Notwithstanding any other provision of this License, if you modify the

|

||||||

|

Program, your modified version must prominently offer all users

|

||||||

|

interacting with it remotely through a computer network (if your version

|

||||||

|

supports such interaction) an opportunity to receive the Corresponding

|

||||||

|

Source of your version by providing access to the Corresponding Source

|

||||||

|

from a network server at no charge, through some standard or customary

|

||||||

|

means of facilitating copying of software. This Corresponding Source

|

||||||

|

shall include the Corresponding Source for any work covered by version 3

|

||||||

|

of the GNU General Public License that is incorporated pursuant to the

|

||||||

|

following paragraph.

|

||||||

|

|

||||||

|

Notwithstanding any other provision of this License, you have

|

||||||

|

permission to link or combine any covered work with a work licensed

|

||||||

|

under version 3 of the GNU General Public License into a single

|

||||||

|

combined work, and to convey the resulting work. The terms of this

|

||||||

|

License will continue to apply to the part which is the covered work,

|

||||||

|

but the work with which it is combined will remain governed by version

|

||||||

|

3 of the GNU General Public License.

|

||||||

|

|

||||||

|

14. Revised Versions of this License.

|

||||||

|

|

||||||

|

The Free Software Foundation may publish revised and/or new versions of

|

||||||

|

the GNU Affero General Public License from time to time. Such new versions

|

||||||

|

will be similar in spirit to the present version, but may differ in detail to

|

||||||

|

address new problems or concerns.

|

||||||

|

|

||||||

|

Each version is given a distinguishing version number. If the

|

||||||

|

Program specifies that a certain numbered version of the GNU Affero General

|

||||||

|

Public License "or any later version" applies to it, you have the

|

||||||

|

option of following the terms and conditions either of that numbered

|

||||||

|

version or of any later version published by the Free Software

|

||||||

|

Foundation. If the Program does not specify a version number of the

|

||||||

|

GNU Affero General Public License, you may choose any version ever published

|

||||||

|

by the Free Software Foundation.

|

||||||

|

|

||||||

|

If the Program specifies that a proxy can decide which future

|

||||||

|

versions of the GNU Affero General Public License can be used, that proxy's

|

||||||

|

public statement of acceptance of a version permanently authorizes you

|

||||||

|

to choose that version for the Program.

|

||||||

|

|

||||||

|

Later license versions may give you additional or different

|

||||||

|

permissions. However, no additional obligations are imposed on any

|

||||||

|

author or copyright holder as a result of your choosing to follow a

|

||||||

|

later version.

|

||||||

|

|

||||||

|

15. Disclaimer of Warranty.

|

||||||

|

|

||||||

|

THERE IS NO WARRANTY FOR THE PROGRAM, TO THE EXTENT PERMITTED BY

|

||||||

|

APPLICABLE LAW. EXCEPT WHEN OTHERWISE STATED IN WRITING THE COPYRIGHT

|

||||||

|

HOLDERS AND/OR OTHER PARTIES PROVIDE THE PROGRAM "AS IS" WITHOUT WARRANTY

|

||||||

|

OF ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING, BUT NOT LIMITED TO,

|

||||||

|

THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR

|

||||||

|

PURPOSE. THE ENTIRE RISK AS TO THE QUALITY AND PERFORMANCE OF THE PROGRAM

|

||||||

|

IS WITH YOU. SHOULD THE PROGRAM PROVE DEFECTIVE, YOU ASSUME THE COST OF

|

||||||

|

ALL NECESSARY SERVICING, REPAIR OR CORRECTION.

|

||||||

|

|

||||||

|

16. Limitation of Liability.

|

||||||

|

|

||||||

|

IN NO EVENT UNLESS REQUIRED BY APPLICABLE LAW OR AGREED TO IN WRITING

|

||||||

|

WILL ANY COPYRIGHT HOLDER, OR ANY OTHER PARTY WHO MODIFIES AND/OR CONVEYS

|

||||||

|

THE PROGRAM AS PERMITTED ABOVE, BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY

|

||||||

|

GENERAL, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES ARISING OUT OF THE

|

||||||

|

USE OR INABILITY TO USE THE PROGRAM (INCLUDING BUT NOT LIMITED TO LOSS OF

|

||||||

|

DATA OR DATA BEING RENDERED INACCURATE OR LOSSES SUSTAINED BY YOU OR THIRD

|

||||||

|

PARTIES OR A FAILURE OF THE PROGRAM TO OPERATE WITH ANY OTHER PROGRAMS),

|

||||||

|

EVEN IF SUCH HOLDER OR OTHER PARTY HAS BEEN ADVISED OF THE POSSIBILITY OF

|

||||||

|

SUCH DAMAGES.

|

||||||

|

|

||||||

|

17. Interpretation of Sections 15 and 16.

|

||||||

|

|

||||||

|

If the disclaimer of warranty and limitation of liability provided

|

||||||

|

above cannot be given local legal effect according to their terms,

|

||||||

|

reviewing courts shall apply local law that most closely approximates

|

||||||

|

an absolute waiver of all civil liability in connection with the

|

||||||

|

Program, unless a warranty or assumption of liability accompanies a

|

||||||

|

copy of the Program in return for a fee.

|

||||||

|

|

||||||

|

END OF TERMS AND CONDITIONS

|

||||||

196

README.md

Normal file

@@ -0,0 +1,196 @@

|

|||||||

|

# youtube-local

|

||||||

|

|

||||||

|

|

||||||

|

youtube-local is a browser-based client written in Python for watching Youtube anonymously and without the lag of Youtube's slow page. One of its primary features is that all requests are routed through Tor, except for the video file at googlevideo.com. This is analogous to what HookTube (defunct) and Invidious do, except that you do not have to trust a third party to respect your privacy. The assumption here is that Google won't put in the effort to incorporate the video file requests into their tracking, as it's not worth pursuing the very small number of users who care about privacy (routing the video through Tor is also available as an option). Tor has high latency, so this will not be as fast network-wise as regular Youtube. However, using Tor is optional; when not routing through Tor, video pages may load faster than they do with Youtube's page, depending on your browser.
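Since routing through Tor only works when a Tor client is listening locally, a quick reachability check can be useful before enabling it. The following is a minimal sketch, not code from youtube-local: the helper name `is_port_open` is hypothetical, and it assumes Tor's usual SOCKS ports (9050 for the standalone daemon, 9150 for Tor Browser), which your setup may override.

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to (host, port) succeeds."""
    try:
        # create_connection raises OSError (refused, timed out, ...) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Assumed default SOCKS ports: 9050 (Tor daemon), 9150 (Tor Browser)
    for port in (9050, 9150):
        state = "open" if is_port_open("127.0.0.1", port) else "closed"
        print(f"127.0.0.1:{port} is {state}")
```

An open port only shows that something is listening there; it does not prove that the listener is Tor or that traffic is actually being routed through it.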

|

||||||

|

|

||||||

|

The Youtube API is not used, so no API keys are needed. It uses the same requests as the Youtube webpage.

|

||||||

|

|

||||||

|

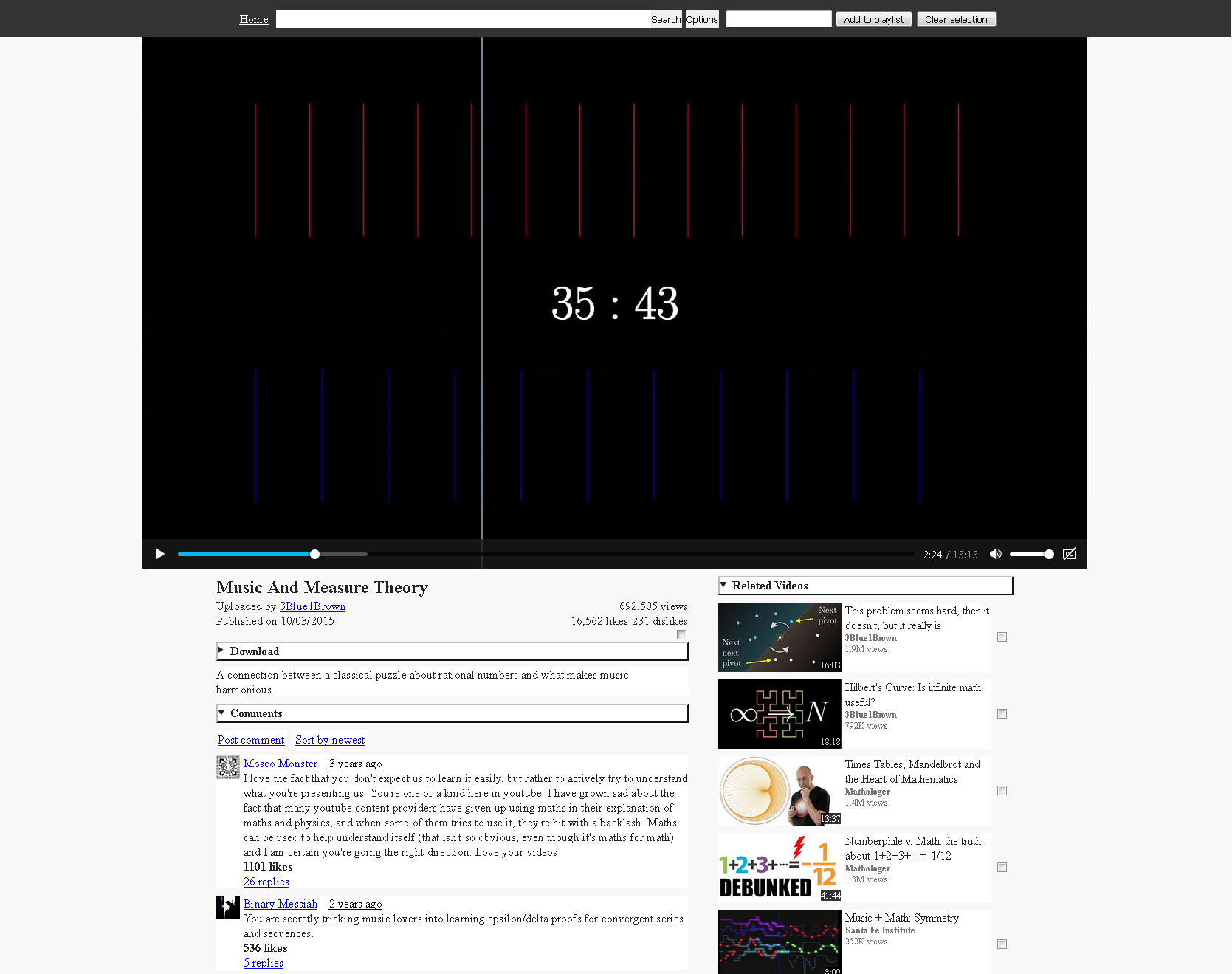

## Screenshots

|

||||||

|

[Gray theme](https://user-images.githubusercontent.com/28744867/64483431-8e1c8e80-d1b6-11e9-999c-14d36ddd582f.png)

|

||||||

|

|

||||||

|

[Dark theme](https://user-images.githubusercontent.com/28744867/64483432-8fe65200-d1b6-11e9-90bd-32869542e32e.png)

|

||||||

|

|

||||||

|

[Non-Theater mode](https://user-images.githubusercontent.com/28744867/64483433-92e14280-d1b6-11e9-9b56-2ef5d64c372f.png)

|

||||||

|

|

||||||

|

[Channel](https://user-images.githubusercontent.com/28744867/64483436-95dc3300-d1b6-11e9-8efc-b19b1f1f3bcf.png)

|

||||||

|

|

||||||

|

[Downloads](https://user-images.githubusercontent.com/28744867/64483437-a2608b80-d1b6-11e9-9e5a-4114391b7304.png)

|

||||||

|

|

||||||

|

## Features

|

||||||

|

* Standard pages of Youtube: search, channels, playlists

|

||||||

|

* Anonymity from Google's tracking by routing requests through Tor

|

||||||

|

* Local playlists: These solve the two problems with creating playlists on Youtube: (1) they're datamined and (2) videos frequently get deleted by Youtube and lost from the playlist, making it very difficult to find a reupload as the title of the deleted video is not displayed.

|

||||||

|

* Themes: Light, Gray, and Dark

|

||||||

|

* Subtitles

|

||||||

|

* Easily download videos or their audio

|

||||||

|

* No ads

|

||||||

|

* View comments

|

||||||

|

* Javascript not required

|

||||||

|

* Theater and non-theater mode

|

||||||

|

* Subscriptions that are independent from Youtube

|

||||||

|

* Can import subscriptions from Youtube

|

||||||

|

* Works by checking channels individually

|

||||||

|

* Can be set to automatically check channels.

|

||||||

|

* For efficiency of requests, the frequency of checking is based on how quickly the channel posts videos

|

||||||

|

* Can mute channels, so as to have a way to "soft" unsubscribe. Muted channels won't be checked automatically or when using the "Check all" button. Videos from these channels will be hidden.

|

||||||

|

* Can tag subscriptions to organize them or check specific tags

|

||||||

|

* Fast page

|

||||||

|

* No distracting/slow layout rearrangement

|

||||||

|

* No lazy-loading of comments; they are ready instantly.

|

||||||

|

* Settings allow fine-tuned control over when/how comments or related videos are shown:

|

||||||

|

1. Shown by default, with click to hide

|

||||||

|

2. Hidden by default, with click to show

|

||||||

|

3. Never shown

|

||||||

|

* Optionally skip sponsored segments using [SponsorBlock](https://github.com/ajayyy/SponsorBlock)'s API

|

||||||

|

* Custom video speeds

|

||||||

|

* Video transcript

|

||||||

|

* Supports all available video qualities: 144p through 2160p
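The subscriptions feature above says that the checking frequency depends on how quickly a channel posts videos. The README does not describe the actual rule, so the following is only an illustrative sketch of one way such a schedule could work: the function name `next_check_delay`, the `min_delay` and `max_delay` bounds, and the "half the average gap" rule are all assumptions, not the project's implementation.

```python
from datetime import datetime, timedelta


def next_check_delay(upload_times,
                     min_delay=timedelta(hours=1),
                     max_delay=timedelta(days=7)):
    """Suggest how long to wait before checking a channel again.

    upload_times: datetimes of the channel's recent uploads (any order).
    """
    if len(upload_times) < 2:
        # Not enough history to estimate a posting rate; check rarely.
        return max_delay
    ordered = sorted(upload_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = sum(gaps, timedelta()) / len(gaps)
    # Hypothetical rule: check about twice per typical gap, within bounds.
    return max(min_delay, min(max_delay, average_gap / 2))


if __name__ == "__main__":
    start = datetime(2024, 1, 1)
    daily_uploads = [start + timedelta(days=i) for i in range(5)]
    print(next_check_delay(daily_uploads))
```

Clamping the result keeps very active channels from being polled too often and keeps dormant channels from never being checked.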

|

||||||

|

|

||||||

|

## Planned features

|

||||||

|

- [ ] Putting videos from subscriptions or local playlists into the related videos

|

||||||

|

- [x] Information about video (geographic regions, region of Tor exit node, etc)

|

||||||

|

- [ ] Ability to delete playlists

|

||||||

|

- [ ] Auto-saving of local playlist videos

|

||||||

|

- [ ] Import youtube playlist into a local playlist

|

||||||

|

- [ ] Rearrange items of local playlist

|

||||||

|

- [x] Video qualities other than 360p and 720p by muxing video and audio

|

||||||

|

- [ ] Corrected .m4a downloads

|

||||||

|

- [x] Indicate if comments are disabled

|

||||||

|

- [x] Indicate how many comments a video has

|

||||||

|

- [ ] Featured channels page

|

||||||

|

- [ ] Channel comments

|

||||||

|

- [x] Video transcript

|

||||||

|

- [x] Automatic Tor circuit change when blocked

|

||||||

|

- [x] Support &t parameter

|

||||||

|

- [ ] Subscriptions: Option to mark what has been watched

|

||||||

|

- [ ] Subscriptions: Option to filter videos based on keywords in title or description

|

||||||

|

- [ ] Subscriptions: Delete old entries and thumbnails

|

||||||

|

- [ ] Support for more sites, such as Vimeo, Dailymotion, LBRY, etc.

|

||||||

|

|

||||||

|

## Installing

|

||||||

|

|

||||||

|

### Windows

|

||||||

|

|

||||||

|

Download the zip file under the Releases page. Unzip it anywhere you choose.

|

||||||

|

|

||||||

|

### Linux/MacOS

|

||||||

|

|

||||||

|

Download the tarball under the Releases page and extract it. `cd` into the directory and run

|

||||||

|

```

|

||||||

|

pip3 install -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

**Note**: If pip isn't installed, first try installing it from your package manager. Make sure you install pip for Python 3. For example, the package you need on Debian is python3-pip rather than python-pip. If your package manager doesn't provide it, try to install it according to [this answer](https://unix.stackexchange.com/a/182467), but make sure you run `python3 get-pip.py` instead of `python get-pip.py`.

|

||||||

|

|

||||||

|

- Arch users can use the [AUR package](https://aur.archlinux.org/packages/youtube-local-git) maintained by @ByJumperX4

|

||||||

|

- RPM-based distros such as Fedora/OpenSUSE/RHEL/CentOS can use the [COPR package](https://copr.fedorainfracloud.org/coprs/anarcoco/youtube-local) maintained by @ByJumperX4

|

||||||

|

|

||||||

|

|

||||||

|

### FreeBSD

|

||||||

|

|

||||||

|

If pip isn't installed, first try installing it from the package manager:

|

||||||

|

```

|

||||||

|

pkg install py39-pip

|

||||||

|

```

|

||||||

|

|

||||||

|

Some packages fail to compile when installed with pip, so install them from the package manager instead:

|

||||||

|

```

|

||||||

|

pkg install py39-gevent py39-sqlite3

|

||||||

|

```

|

||||||

|

|

||||||

|

Download the tarball under the Releases page and extract it. `cd` into the directory and run

|

||||||

|

```

|

||||||

|

pip install -r requirements.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

**Note**: You may have to start the server redirecting its output to /dev/null to avoid I/O errors:

|

||||||

|

```

|

||||||

|

python3 ./server.py > /dev/null 2>&1 &

|

||||||

|

```
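The same idea as the shell redirection above can be expressed from Python, which may be handy in a wrapper script. This is a minimal sketch using only the standard library; the helper name `start_detached` is hypothetical, and it assumes `server.py` is in the current directory, as in the command above.

```python
import subprocess
import sys


def start_detached(command):
    """Start command with stdout and stderr discarded; return the Popen handle."""
    return subprocess.Popen(
        command,
        stdout=subprocess.DEVNULL,  # like "> /dev/null"
        stderr=subprocess.DEVNULL,  # like "2>&1"
    )


# Example use, similar in spirit to: python3 ./server.py > /dev/null 2>&1 &
#     process = start_detached([sys.executable, "server.py"])
#     print(process.pid)
```

`Popen` returns immediately without waiting for the child, which roughly corresponds to the trailing `&` in the shell command.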

|

||||||

|

|

||||||

|

## Usage

|

||||||

|

|

||||||

|

To run the program on Windows, open `run.bat`. On Linux/MacOS, run `python3 server.py`.

|

||||||

|

|

||||||

|